Safeguarding Sensitive Files and Detecting Unauthorized Access on S3 Bucket Storage

Amazon Simple Storage Service (S3) is a widely used object storage service, and securing your S3 buckets is crucial to protecting sensitive data. In this guide, we will walk through the steps to automatically detect unauthorized access to an S3 bucket by monitoring reads of a special file that holds our secrets (secrets.txt).

Playbook creation

To help us quickly build the environment, we are going to use Ansible along with a CloudFormation template to create and set up the initial environment.

A Linux machine with Ansible installed is required. We will also need the Amazon Web Services (AWS) software development kit (SDK) for Python (boto3), which allows Python developers to write software that makes use of services like Amazon S3 and Amazon EC2.
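Before going further, it can help to confirm that the tooling is in place. The snippet below is a minimal sketch and not part of the original setup; the helper name `missing_prerequisites` is hypothetical. It only checks that the `ansible-playbook` and `ansible-vault` executables are on the PATH and that the `boto3` package is importable. It does not validate versions or AWS credentials.

```python
import importlib.util
import shutil


def missing_prerequisites(commands=("ansible-playbook", "ansible-vault"),
                          modules=("boto3",)):
    """Return the names of required commands and Python modules that were not found."""
    # shutil.which returns None when the executable is not on the PATH.
    missing = [cmd for cmd in commands if shutil.which(cmd) is None]
    # find_spec returns None when a top-level module cannot be imported.
    missing += [mod for mod in modules if importlib.util.find_spec(mod) is None]
    return missing


if __name__ == "__main__":
    problems = missing_prerequisites()
    if problems:
        print("Missing prerequisites:", ", ".join(problems))
    else:
        print("All prerequisites found.")
```

If anything is reported missing, install it with your distribution's package manager or with pip before continuing.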

Let’s start by creating a directory structure for our files using the command below:

mkdir -p detectionlab/vars detectionlab/files detectionlab/templates

cd detectionlab

Now, we will create an Ansible Vault to store our AWS access key and secret key credentials.

Ansible Vault encrypts variables and files so you can protect sensitive content such as passwords or keys rather than leaving it visible as plaintext in playbooks or roles.

You will be prompted to create a password while creating the vault, and it is critical to remember the password.

ansible-vault create vars/vault.yml

The content of the vault should be in the format below. Substitute ACCESSKEY and SECRETKEY with the appropriate information for your environment.

aws_access_key: ACCESSKEY
aws_secret_key: SECRETKEY

Using your favorite file editor, let’s create our Ansible playbook “playbook-creation.yml” with the content below:

#
# - Create a stack and rollback on failure
# - Upload the secret file
#
---
- hosts: localhost
  connection: local
  vars_files:
    - vars/vault.yml
  vars:
    build_name: ansible-cloudformation
    bucket_name: sensitive-bucket-demo
    secret_filename: secrets.txt
  tasks:
    - name: Get the summary information if the stack already exists
      cloudformation_info:
        aws_access_key: "{{ aws_access_key }}"
        aws_secret_key: "{{ aws_secret_key }}"
        region: "us-east-1"
        stack_name: "{{ build_name }}"
        all_facts: yes
      # Register the result so the next task can test whether the stack exists
      register: stack_info

    - name: Create our cloudformation stack if it doesn't exist
      cloudformation:
        stack_name: "{{ build_name }}"
        aws_access_key: "{{ aws_access_key }}"
        aws_secret_key: "{{ aws_secret_key }}"
        region: "us-east-1"
        state: "present"
        template_body: "{{ lookup('template', 'cloudformation.j2') }}"
        on_create_failure: DELETE
        tags:
          Stack: "{{ build_name }}"
      when: stack_info.cloudformation[build_name] is undefined

    - name: Upload our secret file
      aws_s3:
        aws_access_key: "{{ aws_access_key }}"
        aws_secret_key: "{{ aws_secret_key }}"
        bucket: "{{ bucket_name }}"
        object: "/{{ secret_filename }}"
        src: "files/secrets.txt"
        mode: put
        permission: []

Now, we can create the CloudFormation template that will be used by our Ansible playbook.

Using your favorite file editor, let’s go ahead and create the file “templates/cloudformation.j2” with the content below:

AWSTemplateFormatVersion: 2010-09-09
Resources:
  LambdaRoleID:
    Type: 'AWS::IAM::Role'
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - lambda.amazonaws.com
            Action:
              - 'sts:AssumeRole'
      Path: /
      Policies:
        - PolicyName: LambaSecurityHubPutFinding
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action: 'securityhub:BatchImportFindings'
                Resource: '*'
              - Effect: Allow
                Action:
                  - "logs:CreateLogStream"
                  - "logs:PutLogEvents"
                Resource: !Join
                  - ''
                  - - 'arn:aws:logs:'
                    - !Ref AWS::Region
                    - ':'
                    - !Ref AWS::AccountId
                    - ':log-group:/aws/lambda/SecretFileDectection:*'
              - Effect: Allow
                Action: 'logs:CreateLogGroup'
                Resource: !Join
                  - ''
                  - - 'arn:aws:logs:'
                    - !Ref AWS::Region
                    - ':'
                    - !Ref AWS::AccountId
                    - ':*'
  LambdaFunctionID:
    Type: 'AWS::Lambda::Function'
    Properties:
      FunctionName: SecretFileDectection
      Code:
        ZipFile: |
          import boto3
          import re

          def lambda_handler(event, context):
              # Get IP version (raw string avoids invalid-escape warnings)
              if re.search(r"\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}", event['detail']['sourceIPAddress']):
                  ipver = "SourceIpV4"
              else:
                  ipver = "SourceIpV6"

              # Get Account ID
              client = boto3.client('sts')
              response = client.get_caller_identity()
              acctId = response['Account']
              print(acctId)

              # Check if assumed role and set userName
              if event['detail']['userIdentity']['type'] == "AssumedRole":
                  userName = event['detail']['userIdentity']['sessionContext']['sessionIssuer']['userName']
              elif 'userName' in event['detail']['userIdentity']:
                  userName = event['detail']['userIdentity']['userName']
              else:
                  userName = event['detail']['userIdentity']['arn']

              # Write finding
              client = boto3.client('securityhub')
              client.batch_import_findings(
                  Findings = [{
                      "AwsAccountId": acctId,
                      "CreatedAt": event['detail']['eventTime'],
                      "Description": "Sensitive file used",
                      "GeneratorId": "custom",
                      "Id": "sensitive-file-" + event['detail']['sourceIPAddress'],
                      "ProductArn": "arn:aws:securityhub:us-east-1:" + acctId + ":product/" + acctId + "/default",
                      "Resources": [
                          {
                              "Id": "arn:aws:s3:::" + event['detail']['requestParameters']['bucketName'] + "/" + event['detail']['requestParameters']['key'],
                              "Partition": "aws",
                              "Region": "us-east-1",
                              "Type": "AwsS3Bucket"
                          }
                      ],
                      "Network": {
                          "Direction": "IN",
                          ipver: event['detail']['sourceIPAddress']
                      },
                      "SchemaVersion": "2018-10-08",
                      "Title": "Sensitive File Used",
                      "UpdatedAt": event['detail']['eventTime'],
                      "UserDefinedFields": {
                          "userName": userName,
                          "eventName": event['detail']['eventName']
                      },
                      "Types": [
                          "Effects/Data Exfiltration",
                          "TTPs/Collection",
                          "Unusual Behaviors/User"
                      ],
                      "Severity": {
                          "Label": "CRITICAL",
                          "Original": "CRITICAL"
                      }
                  }]
              )
              return {
                  'statusCode': 200,
                  'body': "Success!"
              }
      Handler: index.lambda_handler
      Runtime: python3.9
      Role: !GetAtt LambdaRoleID.Arn
  SensitiveDataBucket:
    Type: 'AWS::S3::Bucket'
    Properties:
      BucketName: {{ bucket_name }}
  CloudTrailLogBucket:
    Type: 'AWS::S3::Bucket'
    Properties:
      BucketName: cloudtrail-logs-{{ bucket_name }}

Finally, we will create the secret file that we want to monitor using the commands below:

echo "username: admin " > files/secrets.txt
echo "password: password" >> files/secrets.txt

We should now have the files and templates that Ansible needs to create our AWS environment.

Running the playbook will prompt you for the password you entered while creating the vault in the previous step.

ansible-playbook --ask-vault-password playbook-creation.yml

The playbook will do the following for us:

- Create the IAM role that allows our Lambda function to perform the Security Hub BatchImportFindings action

- Create the lambda function that will be triggered by an event

- Create the S3 bucket where we will upload our secret file and a bucket for CloudTrail logs
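The two small decisions the Lambda function makes (which IP field to fill in, and which name to report for the caller) can be tried locally without deploying anything. The sketch below is not the code from the template: it swaps the regular expression for Python's standard `ipaddress` module, and the function names `ip_field_name` and `resolve_user_name` are hypothetical. One reason to consider the swap is that CloudTrail can record an AWS service DNS name in `sourceIPAddress` when a service makes the call on your behalf, and a value like that is neither IPv4 nor IPv6.

```python
import ipaddress


def ip_field_name(source_ip):
    """Return the finding's Network key for this address, or None if it is not an IP."""
    try:
        version = ipaddress.ip_address(source_ip).version
    except ValueError:
        # For example a service DNS name such as "cloudtrail.amazonaws.com".
        return None
    return "SourceIpV4" if version == 4 else "SourceIpV6"


def resolve_user_name(user_identity):
    """Pick a display name from a CloudTrail userIdentity block."""
    if user_identity.get("type") == "AssumedRole":
        # For assumed roles, report the role that issued the session.
        return user_identity["sessionContext"]["sessionIssuer"]["userName"]
    if "userName" in user_identity:
        return user_identity["userName"]
    # Fall back to the full ARN (for example, the root user has no userName).
    return user_identity["arn"]


if __name__ == "__main__":
    # Illustrative values only; these are documentation-range addresses.
    print(ip_field_name("203.0.113.10"))
    print(ip_field_name("2001:db8::1"))
    print(resolve_user_name({"type": "IAMUser", "userName": "alice",
                             "arn": "arn:aws:iam::111122223333:user/alice"}))
```

When `ip_field_name` returns None, one option is to leave the IP field out of the finding's Network block rather than label a hostname as IPv6.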

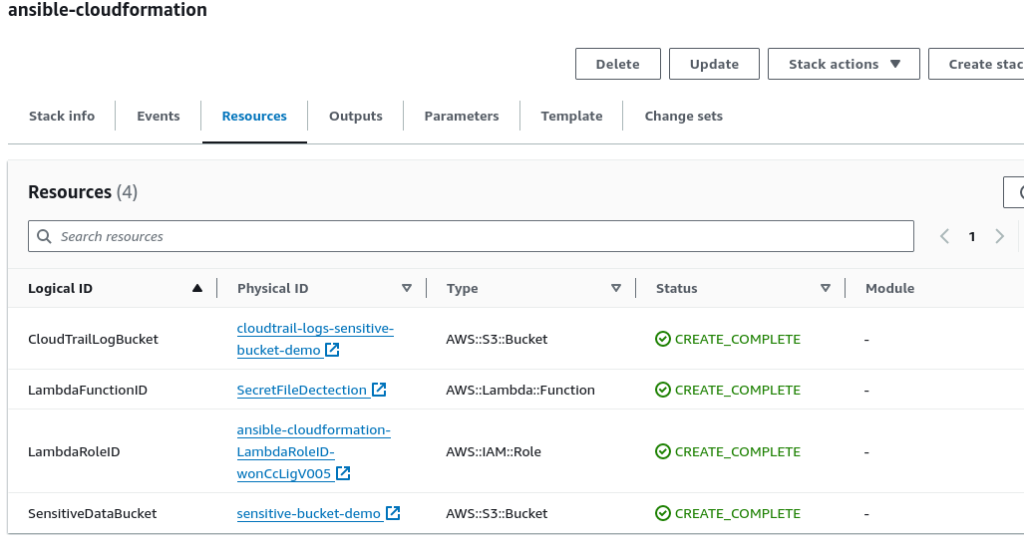

When the playbook is finished running successfully, the resources created in the CloudFormation “ansible-cloudformation” should like the screenshot below:

Implementing AWS CloudTrail

AWS CloudTrail is a service that provides detailed logs of activities and events within an AWS account. It essentially tracks and records every API call and action taken by various resources and users within the AWS environment. These logs include information such as who performed the action, which resources were involved, and when the action occurred.

We’ll activate AWS CloudTrail to record all management events (API calls) and the S3 data events for the ‘sensitive-bucket-demo’ bucket in our AWS account. This includes any events related to accessing our ‘secrets.txt’ file.

- Navigate to AWS CloudTrail in the Management Console.

- Create a new trail.

- Provide a Trail name: “sensitive-bucket-management-events”

- Select “Use existing S3 bucket” for the storage location

- Browse and select “cloudtrail-logs-sensitive-bucket-demo” as the trail log bucket name

- Provide a new AWS KMS alias

- Click Next

- In the “Events” section, make sure “Data events” is selected

- Switch to the basic event selectors and then click Continue

- In the “Data events” section:

  - Uncheck “Read” and “Write” for all current and future S3 buckets

  - Click Browse and select the S3 bucket “sensitive-bucket-demo”

- Click Next and then click Create trail

We should now have a new trail.
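For readers who prefer to script this step, the data-event part of the console configuration can be approximated with the CloudTrail `PutEventSelectors` API. This is a minimal sketch under assumptions, not the procedure used above: it assumes the trail already exists, that both read and write data events are wanted for the bucket, and the helper names `build_event_selectors` and `apply_event_selectors` are hypothetical. The builder is plain Python; only the second function needs boto3 and AWS credentials.

```python
def build_event_selectors(bucket_name, read_write_type="All"):
    """Build the EventSelectors list for CloudTrail's PutEventSelectors API."""
    return [
        {
            # "All" records both read and write events.
            "ReadWriteType": read_write_type,
            "IncludeManagementEvents": True,
            "DataResources": [
                {
                    "Type": "AWS::S3::Object",
                    # A trailing slash scopes logging to every object in this bucket.
                    "Values": [f"arn:aws:s3:::{bucket_name}/"],
                }
            ],
        }
    ]


def apply_event_selectors(trail_name, bucket_name):
    """Apply the selectors to an existing trail (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    cloudtrail = boto3.client("cloudtrail")
    return cloudtrail.put_event_selectors(
        TrailName=trail_name,
        EventSelectors=build_event_selectors(bucket_name),
    )


if __name__ == "__main__":
    print(build_event_selectors("sensitive-bucket-demo"))
```

Calling `apply_event_selectors("sensitive-bucket-management-events", "sensitive-bucket-demo")` would target the trail and bucket named in this guide.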

Setting up Amazon EventBridge

Amazon EventBridge is a serverless event bus service that simplifies the management and routing of events across AWS services.

We will use it to set up a rule that triggers our Lambda function, which in turn sends the information to Security Hub.

- Navigate to Amazon EventBridge in the Management Console

- Create rule

- Provide a name for the rule: “sensitive-data-rule“

- Select “Rule with an event pattern”

- Click Next

- Select “AWS events or EventBridge partner Events” in the Event source section

- In the Event Pattern section:

  - Select “Simple Storage Service (S3)” in the “AWS service” drop-down list

  - Select “Object-Level API Call via CloudTrail” in the Event type

  - Click on Edit pattern

  - Replace the custom pattern (JSON) with the content below

- Updated Event pattern rule

- Click next

- Select “Lambda function” in the “Select a target” drop-down list

- Select “SecretFileDectection” in the Function drop-down list

- Click next

- Click next

- Click Create rule
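The custom pattern itself is not reproduced in the steps above. As an illustration only, a pattern along the following lines would match CloudTrail-delivered `GetObject` calls on the secret file. Every value here is an assumption: the event name, the bucket name, and the object key `secrets.txt` (which presumes the upload stored the object without a leading slash). The `matches` helper is a deliberately simplified, hypothetical stand-in for EventBridge matching that handles only exact values, and is included just so the pattern can be tried against a sample event locally.

```python
import json

# Assumed pattern: S3 GetObject calls on secrets.txt, as delivered via CloudTrail.
EVENT_PATTERN = {
    "source": ["aws.s3"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["s3.amazonaws.com"],
        "eventName": ["GetObject"],
        "requestParameters": {
            "bucketName": ["sensitive-bucket-demo"],
            "key": ["secrets.txt"],
        },
    },
}


def matches(pattern, event):
    """Simplified matcher: a list means 'any of these values', a dict recurses."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


if __name__ == "__main__":
    # JSON form that could be pasted into the pattern editor.
    print(json.dumps(EVENT_PATTERN, indent=2))
```

Restricting the pattern to a single key keeps the rule quiet for other objects in the bucket; dropping the `key` entry would instead raise a finding for every read in the bucket.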

Simulating access and Checking Findings

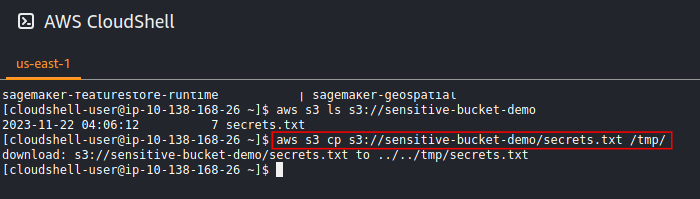

The quickest way to test that our triggering rules are working is by leveraging AWS CloudShell to try downloading a copy of our secret file.

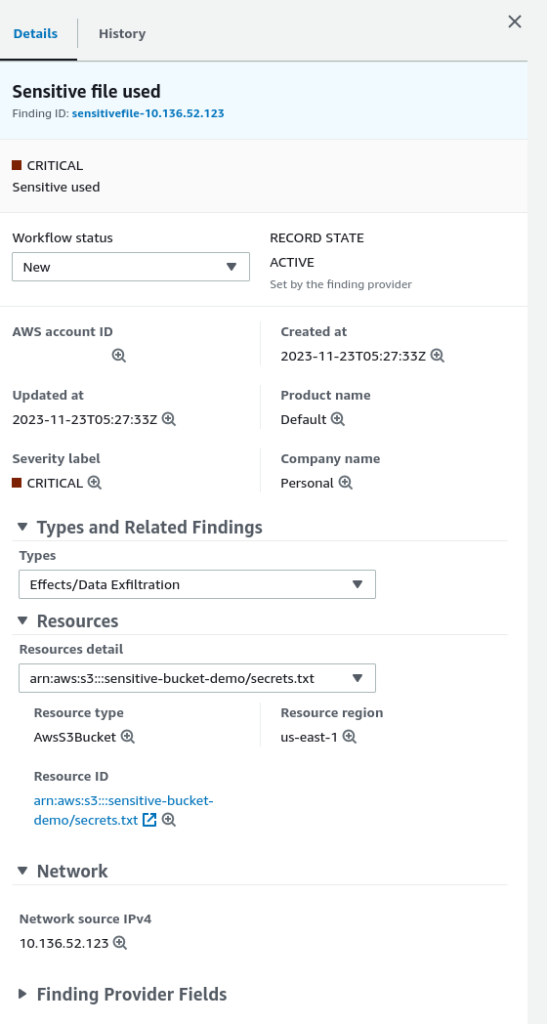

Let’s now jump to the SecurityHub Console and go to the Findings. We should now see events triggered when the secret file was downloaded.

As you can see with the screenshot above, accessing our sensitive bucket triggered our lambda function, which in turn sent the associated information to SecurityHub.
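The same check can be made from a script instead of the console. The sketch below is an assumption-laden illustration rather than part of the original walkthrough: it filters on the `GeneratorId` value `custom` and the `CRITICAL` severity label that the Lambda function writes, and the helper names are hypothetical. The filter builder is plain Python; the query function needs boto3, credentials, and Security Hub enabled in the region.

```python
def build_finding_filters(generator_id="custom", severity_label="CRITICAL"):
    """Build a Filters argument for Security Hub's GetFindings API."""
    return {
        "GeneratorId": [{"Value": generator_id, "Comparison": "EQUALS"}],
        "SeverityLabel": [{"Value": severity_label, "Comparison": "EQUALS"}],
    }


def list_sensitive_file_findings(max_results=10):
    """Return (title, updated_at, user_defined_fields) for matching findings."""
    import boto3  # imported lazily so the builder above works without boto3

    securityhub = boto3.client("securityhub")
    response = securityhub.get_findings(
        Filters=build_finding_filters(),
        MaxResults=max_results,
    )
    return [
        (finding["Title"], finding["UpdatedAt"], finding.get("UserDefinedFields", {}))
        for finding in response["Findings"]
    ]


if __name__ == "__main__":
    print(build_finding_filters())
```

Because `custom` is a generic generator ID, other custom integrations in the same account could match this filter as well; a more specific ID in the Lambda function would make the query more precise.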

Conclusion

By implementing robust measures such as setting up fine-grained access permissions, configuring detailed logging, and utilizing AWS Security Hub with custom Lambda functions, you’ve empowered your infrastructure to safeguard sensitive files like ‘secrets.txt’.

Remember, security is an ongoing journey; staying vigilant, regularly reviewing security configurations, and adapting to emerging threats will ensure a resilient defense against unauthorized access, protecting your invaluable data assets in the ever-evolving digital realm.

If you have any questions or require assistance, we are actively seeking strategic partnerships and would welcome the opportunity to collaborate. Don’t hesitate to contact us!